AAQEP Accreditation

Standard 1 Aspect D

Standard 1d: Evidence shows that, by the time of program completion, candidates exhibit knowledge, skills, and abilities of professional educators appropriate to their target credential or degree, including: Assessment of and for student learning, assessment and data literacy, and use of data to inform practice

Data Sources & Analysis:

Data Source 1

LEE 224 Case Study Rubric

Perspective Captured from Data Source:

Instructor

Rationale for using Data Source:

LEE 224 Assessment and Development of Reading Abilities is the first of three courses focused on using assessment to inform literacy instruction with K-12 students. The Case Study assignment is the final assignment in this class. In it, candidates administer a variety of literacy assessments to an individual struggling K-12 reader, analyze the assessment results, use the results to develop an individualized instructional plan, and reassess to gauge growth. Candidates then prepare a case study report that details the assessment tools and results, analyzes the results before and after individualized instruction, and provides instructional recommendations.

Specific Elements of Data Source:

LEE 224 Case Study Rubric

| Criterion | Exemplary (4) | Accomplished (3) | Developing (2) | Beginning (1) |
|---|---|---|---|---|
| Results | All assessment results reported clearly, concisely, and accurately. | Most quantitative and qualitative assessment results reported clearly, concisely, and accurately. | Some quantitative and qualitative assessment results reported clearly, concisely, and accurately. | Few quantitative and qualitative assessment results reported clearly, concisely, and accurately. |
| Analysis | All assessments analyzed accurately, thoroughly, and competently | Most assessments analyzed accurately; some analyses lack depth | Some assessments analyzed accurately; most analyses lack depth | Few assessments analyzed accurately; few analyses are thorough |
| Strengths/Weaknesses | All needs and strengths targeted. Summary is supported by multiple and varied assessments | Most needs and strengths targeted. Summary is supported by multiple and varied assessments | Some needs and strengths targeted; summary is supported by single assessments | Few needs and strengths targeted; summary does not refer to assessments |
| Instructional Recommendations | 2-3 recommendations provided; all accurately address needs and build on strengths; all appropriately supported; at least 1 activity for home | 2-3 recommendations provided; most accurately address needs and build on strengths; most appropriately supported; at least 1 activity for home | Incomplete recommendations; some accurately address needs and build on strengths; some appropriately supported | Incomplete recommendations; few accurately address needs and build on strengths; few appropriately supported |

Definition of Success for Each Element:

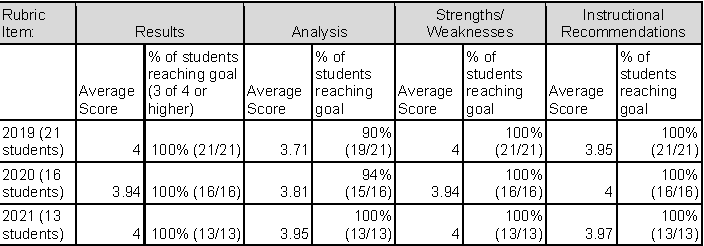

Reports are evaluated and scored using the Case Study Rubric. Each category is scored as Exemplary (4/4), Accomplished (3/4), Developing (2/4), or Beginning (1/4) based on the candidate's ability to administer, score, and analyze assessment tools and to use assessment results and literacy research to guide the design of differentiated instruction for struggling readers.

A candidate who scores ≥ 3 in each category is considered to have met the learning outcome. The program expects 75% of candidates to meet the learning outcome.

Displays of Analyzed Data

Link to Full Dataset:

Interpretation of Data:

Findings from 2019-2021 demonstrate that, overall, program candidates scored well above the programmatic target score of 3 on each of these rubric components. Because this is the first of three courses in which candidates conduct this type of analysis, these results suggest that candidates are meeting program goals at the end of this course and are prepared to continue the work of using data to inform practice.

Data Source 2

Reading, Language, Literacy Specialist Credential Comprehensive Exam Item: LEE 224

Perspective Captured from Data Source:

Program Faculty

Rationale for using Data Source:

LEE 224 is designed as the initial literacy assessment course in the sequence of courses leading to the Reading/Language Arts Specialist credential and/or the Master of Arts in Education with a concentration in Reading/Language Arts. The major focus of the course is to provide candidates with an understanding of the general and specific concepts related to literacy assessment, as well as an understanding of how to conduct in-depth literacy diagnoses, develop literacy strengths, and address literacy weaknesses. Candidates learn to administer and interpret formal, informal, and curriculum-embedded assessment measures. They reflect on the theory they read and discuss regarding assessment procedures and then apply various assessment tools in practice.

The comprehensive exam is a culminating experience option for students pursuing the Master's Degree. Before the exam, students receive a list of potential questions to prepare. During the exam, students are given five of these questions and must select and respond to three. Due to changes in program leadership, we currently have data only from the Summer and Fall 2020 comprehensive exams.

It is important to note that not all students who pursue the Reading Added Authorization or Reading Specialist Credential pursue the Master's Degree. Still, 90-95% of enrolled students pursue the Master's Degree each year, so we believe that, for now, this is an appropriate data source.

Specific Elements of Data Source

LEE 224A: Describe the formal and informal assessments a classroom teacher might use to identify students’ literacy challenges in the following areas: (a) Comprehension and (b) Word Recognition or Decoding. Be specific in naming assessments and explain the potential value of each technique in identifying teaching points.

LEE 224B: Review the attached Running Record from a first grade student. Analyze the results and identify the student’s strengths and weaknesses in comprehension and decoding. Then devise a brief instructional plan for the student that addresses both decoding and comprehension development.

Exam responses are scored using the Reading/Language Arts Comprehensive Exam Rubric.

Definition of Success for Each Element:

An average score of ≥2 is considered passing. Students’ exam scores are also submitted to the university as part of the program’s Student Outcomes Assessment Plan (SOAP). For the SOAP, a mean score of ≥3 is considered to have met the learning outcome. Annually, 75% of students are expected to meet the learning outcome.

Displays of Analyzed Data

Tables of Overall Scores

| LEE 224A Comp Exam Question | Rubric Average (out of 4) | % meeting program goal (≥3) | % passing (score of ≥2) |
|---|---|---|---|
| Summer & Fall 2020 (20 students took the CE; 7 responded to 224A) | 2.81 | 43% (3/7) | 100% (7/7) |

| LEE 224B Comp Exam Question | Rubric Average (out of 4) | % meeting program goal (≥3) | % passing (score of ≥2) |
|---|---|---|---|
| Summer & Fall 2020 (20 students took the CE; 3 responded to 224B) | 3.07 | 67% (2/3) | 100% (3/3) |

Tables of Individual Scores

| LEE 224A Comp Exam Question, 2020 (20 students took the exam; 7 responded to 224A) | CE Scores (out of 4) |
|---|---|
| Student 1 | 3.7 |
| Student 2 | 2.8 |
| Student 3 | 3.3 |
| Student 4 | 2.5 |
| Student 5 | 3.2 |
| Student 6 | 2.2 |
| Student 7 | 2 |
| Average | 2.81 |
| % meeting program goal (≥3) | 43% |
| % passing (score of ≥2) | 100% |

| LEE 224B Comp Exam Question, 2020 (20 students took the exam; 3 responded to 224B) | CE Scores (out of 4) |
|---|---|
| Student 1 | 2.8 |
| Student 2 | 3.1 |
| Student 3 | 3.3 |
| Average | 3.07 |
| % meeting program goal (≥3) | 67% |
| % passing (score of ≥2) | 100% |
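The summary rows in these tables follow from the individual scores by simple arithmetic. As a minimal sketch (the script, its constant names, and the `summarize` helper are illustrative and are not an existing program tool), the following Python recomputes the rubric average, the share of respondents meeting the program goal (≥3), and the share passing (≥2), and checks each item against the 75% SOAP expectation.

```python
from statistics import mean

# Individual comprehensive exam scores (out of 4), copied from the tables above.
SCORES = {
    "LEE 224A": [3.7, 2.8, 3.3, 2.5, 3.2, 2.2, 2.0],
    "LEE 224B": [2.8, 3.1, 3.3],
}

GOAL = 3.0          # SOAP learning-outcome threshold (score >= 3)
PASSING = 2.0       # passing threshold (score >= 2)
TARGET_RATE = 0.75  # share of students expected to meet the learning outcome


def summarize(scores):
    """Return the rubric average, the shares meeting the goal and passing,
    and whether the 75% expectation is met."""
    n = len(scores)
    meeting = sum(1 for s in scores if s >= GOAL)
    passing = sum(1 for s in scores if s >= PASSING)
    return {
        "average": round(mean(scores), 2),
        "meeting_goal": meeting / n,
        "passing": passing / n,
        "target_met": meeting / n >= TARGET_RATE,
    }


for item, scores in SCORES.items():
    s = summarize(scores)
    print(f"{item}: avg {s['average']:.2f}, "
          f"{s['meeting_goal']:.0%} meeting goal, "
          f"{s['passing']:.0%} passing, "
          f"75% target met: {s['target_met']}")
```

Running it reproduces the reported figures (2.81, 43%, 100% for 224A; 3.07, 67%, 100% for 224B) and shows that neither item reaches the 75% expectation.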

Link to Full Dataset:

Interpretation of Data:

Overall, data demonstrate that candidates are able to discuss informal and formal literacy assessments, analyze a common literacy assessment (a running record), and make instructional recommendations. As the tables above show, all candidates passed these items on the exam.

However, only 43% of the candidates who responded to item 224A met the program goal (≥3), and only 67% of those who responded to item 224B did so; both results fall below the 75% expectation. This is an area of concern that we will need to address.

It is also problematic that we have data from only two administrations of the exam, with only 7 and 3 candidates responding to items 224A and 224B, respectively, so we will need to continue to analyze the data moving forward.

Data Source 3

Reading, Language, Literacy Specialist Credential Completer Survey

Perspective Captured from Data Source:

Candidate

Rationale for using Data Source:

In Fall 2020, the RLLSC Program began administering a survey to candidates upon their completion of the program to learn more about their perceptions of how well the program prepared them.

Using the RLLSC Completer Survey allows us to capture candidates’ perceptions of how well the program prepared them to use a variety of literacy assessments in their educational roles.

Specific Elements of Data Source

Definition of Success for Each Element:

Programmatically, our goal is for candidates to rate the program at a 4 or a 5 within each area.

Displays of Analyzed Data

Link to Full Dataset:

RLLSC Program Completion Survey Data (Names Redacted)

Interpretation of Data:

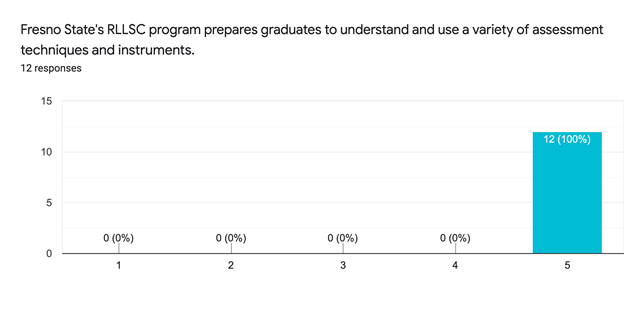

Because the program only began administering the completion survey in Fall 2020, we have only one cycle of data to analyze. All 12 completers surveyed provided responses, for a response rate of 100%.

All completers who responded (12 of 12) indicated that they strongly agreed that the program prepared them to understand and use a variety of assessment techniques and instruments.

Next Steps:

While students feel successful and scores triangulate with their perspectives, we want to continue to develop their data analysis skills. To address these findings, we will align the data analysis tasks across the three clinical courses (LEE 224, LEE 230, and LEE 234) so that the language used to describe them is consistent across courses.

Additionally, two of our data sources rely on the LEE 224 course, and as a program we would like to draw on data from additional points in the program. We plan to continue administering the completer survey each year to gather more data on students’ perspectives.

Despite the high student self-ratings in the completer survey, the strong results on the LEE 224 course assignment, and the 100% pass rate on the comprehensive exam questions, students are not meeting our program goals for comprehensive exam questions 224A and 224B. The low comprehensive exam scores suggest that some students are unable to identify formal and informal literacy assessments and/or make connections to research that supports the use of these assessments. One way to address this is to add a “Research” category to the LEE 224 Case Study rubric and require students to discuss the research behind the formal and informal assessments used in the Case Study assignment.